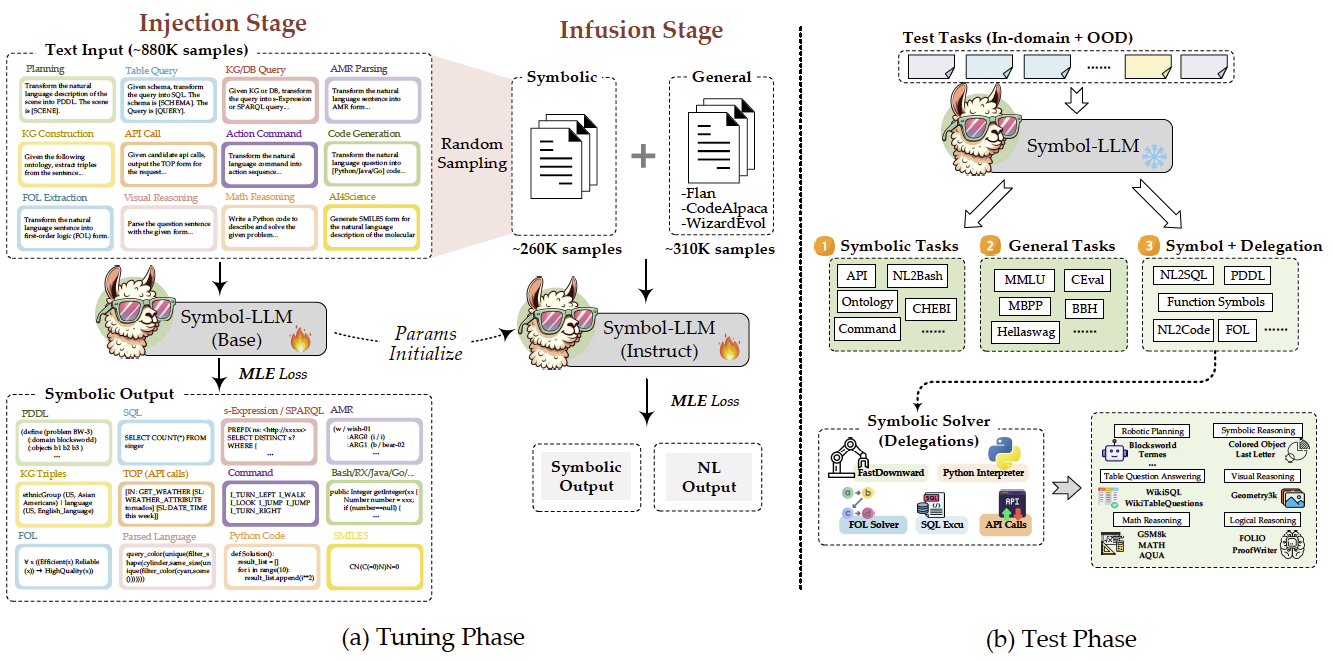

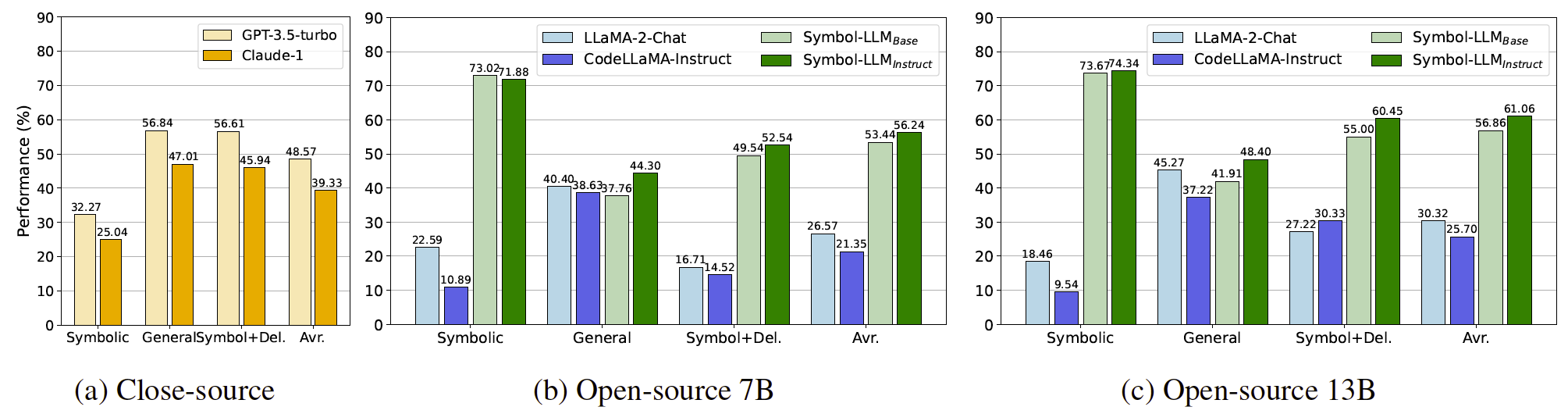

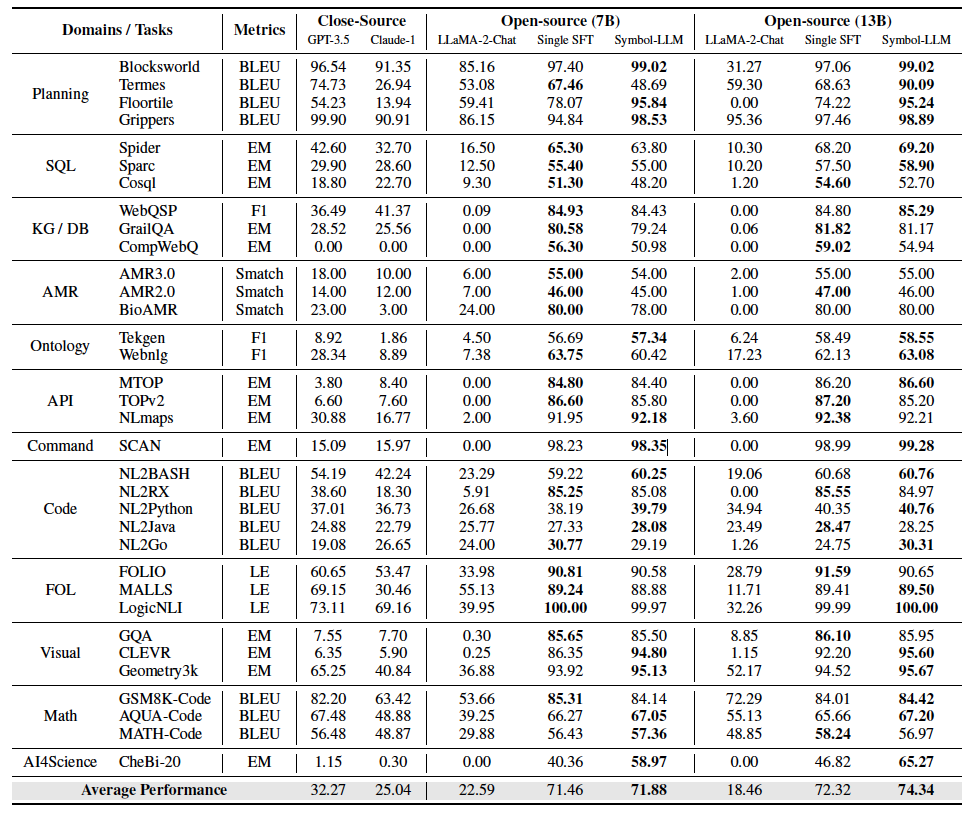

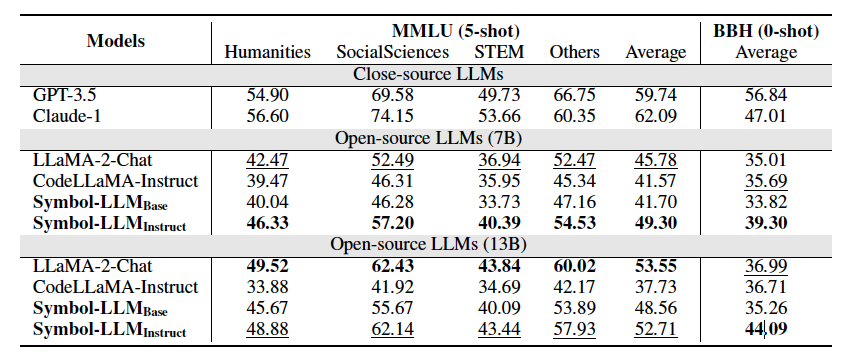

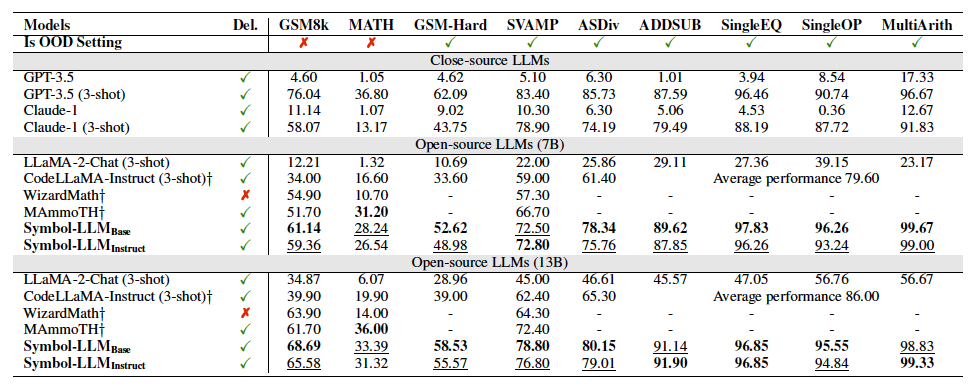

Large Language Models (LLMs) have greatly advanced natural language (NL)-centric tasks through an NL interface. However, natural language alone is not sufficient to represent world knowledge. Existing work addresses this issue by injecting specific symbolic knowledge into LLMs, but ignores two critical challenges: the interrelations between different symbolic forms, and the balance between symbol-centric and NL-centric capabilities. In this work, we tackle these challenges from both a data and a framework perspective and introduce the Symbol-LLM series of models. First, we collect 34 symbolic tasks covering ~20 different forms and unify them to capture symbol interrelations. Then, a two-stage tuning framework injects symbolic knowledge without loss of general ability. Extensive experiments on both symbol-centric and NL-centric tasks demonstrate the balanced and superior performance of the Symbol-LLM series models.