🐯 About Me

I am Fangzhi Xu (徐方植), a final-year PhD student at Xi’an Jiaotong University, majoring in Computer Science and advised by Prof. Jun Liu. I am currently a research intern at ByteDance Seed. I was previously a research intern at Shanghai AI LAB, supervised by Dr. Zhiyong Wu. I also visited Nanyang Technological University (NTU, Singapore 🇸🇬) for one year, advised by Prof. Luu Anh Tuan.

I have published research in top-tier conferences and journals, such as ICLR, NeurIPS, ACL, and IEEE TKDE. I also serve as a PC member (or reviewer) for ICLR, NeurIPS, ICML, ACL, EMNLP, and NAACL.

Research Interests

In general, my research interests lie in large language models, self-improvement and GUI Agents.

Previously, I mainly worked on how to build logic-enhanced language models:

- Logical Reasoning: PathReasoner(ACL’24), Evaluation(IEEE TKDE), Logiformer(SIGIR’22), TaCo(IEEE TNNLS)

Currently, my research focuses on building self-improving LLM Agents capable of interacting, reasoning, and acting. This encompasses a variety of topics, including self-improvement, autonomous (GUI) agents, and more:

- LLM Self-Improvement: Genius(ACL’25), ENVISIONS(ACL’25), Symbol-LLM(ACL’24)

- LLM Reasoning: $\phi$-Decoding(ACL’25), MUR(Preprint)

- [Major] Multimodal Autonomous Agents: OS-Atlas(ICLR’25 Spotlight), SeeClick(ACL’24), OdysseyArena(Preprint), TIDE(Preprint)

🔥 News

- 2026.02: Our works on evaluating long-horizon, inductive agentic tasks (OdysseyArena) and agentic test-time improvement (TIDE) are released!

- 2026.01: Our paper [ScienceBoard] is accepted by ICLR 2026 🎉🎉!

- 2026.01: Our paper LogicEval @IEEE TKDE is recognized as ESI Highly Cited Paper 🎉🎉!

- 2025.11: Our work [MAPS] is accepted by AAAI 2026 🎉🎉!

- 2025.09: Our work [ChartSketcher] is accepted by NeurIPS 2025 🎉🎉!

- 2025.07: Our training-free work [MUR] on LLM Reasoning is released 🎉🎉!

- 2025.06: Our paper [ScienceBoard] is accepted by WCUA@ICML 2025 as an oral paper 🎉🎉!

- 2025.05: Seven papers are accepted by ACL 2025 🎉🎉!

- 2025.01: Our paper [OS-Atlas] is accepted by ICLR 2025 (Spotlight) and [R3-V] is accepted by NAACL 2025 🎉🎉!

📖 Education

- 2021.09 - 2026.06 (expected), M.S. + Ph.D Student, Computer Science, Xi’an Jiaotong University.

- 2017.09 - 2021.06, B.S. in Electrical Engineering, Xi’an Jiaotong University. GPA: 97.62/100, Ranking: 1/365

💻 Internships

- 2026.01 - Now, Intern @ ByteDance Seed 🇨🇳. Focus on GUI Agents at UI-TARS Team.

- 2025.03 - 2026.02, Visiting Ph.D Student @ NTU 🇸🇬. Focus on LLM Reasoning and Self-Improvement.

- 2023.07 - 2025.02, Research Intern @ Shanghai AI LAB 🇨🇳. Focus on LLM, GUI Agents.

📝 Selected Publications

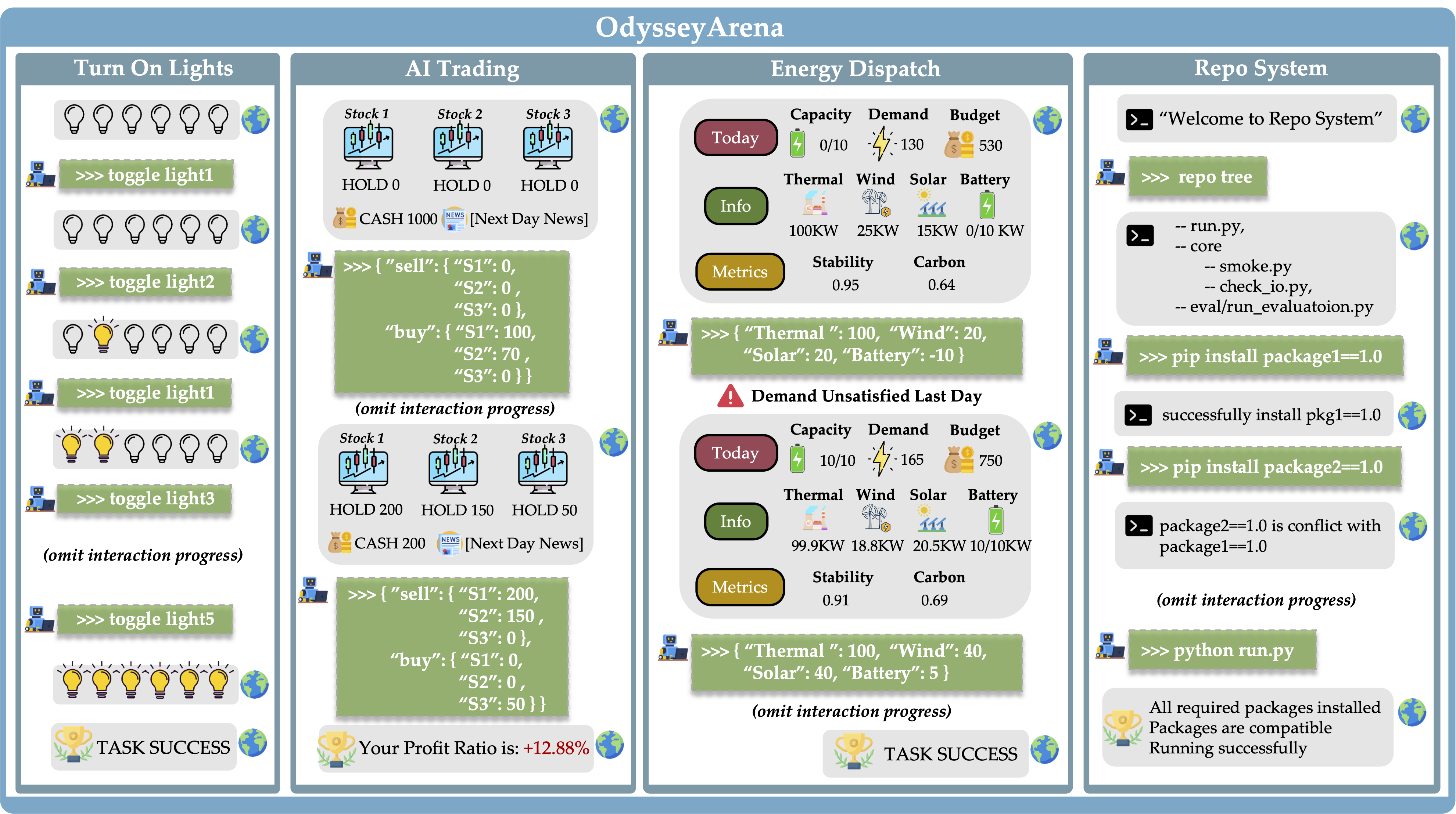

OdysseyArena: Benchmarking Large Language Models For Long-Horizon, Active and Inductive Interactions 🔥🔥

Fangzhi Xu*, Hang Yan*, Qiushi Sun*, Jinyang Wu, Zixian Huang, Muye Huang, Jingyang Gong, Zichen Ding, Kanzhi Cheng, Yian Wang, Xinyu Che, Zeyi Sun, Jian Zhang, Zhangyue Yin, Haoran Luo, Xuanjing Huang, Ben Kao, Jun Liu, Qika Lin✉

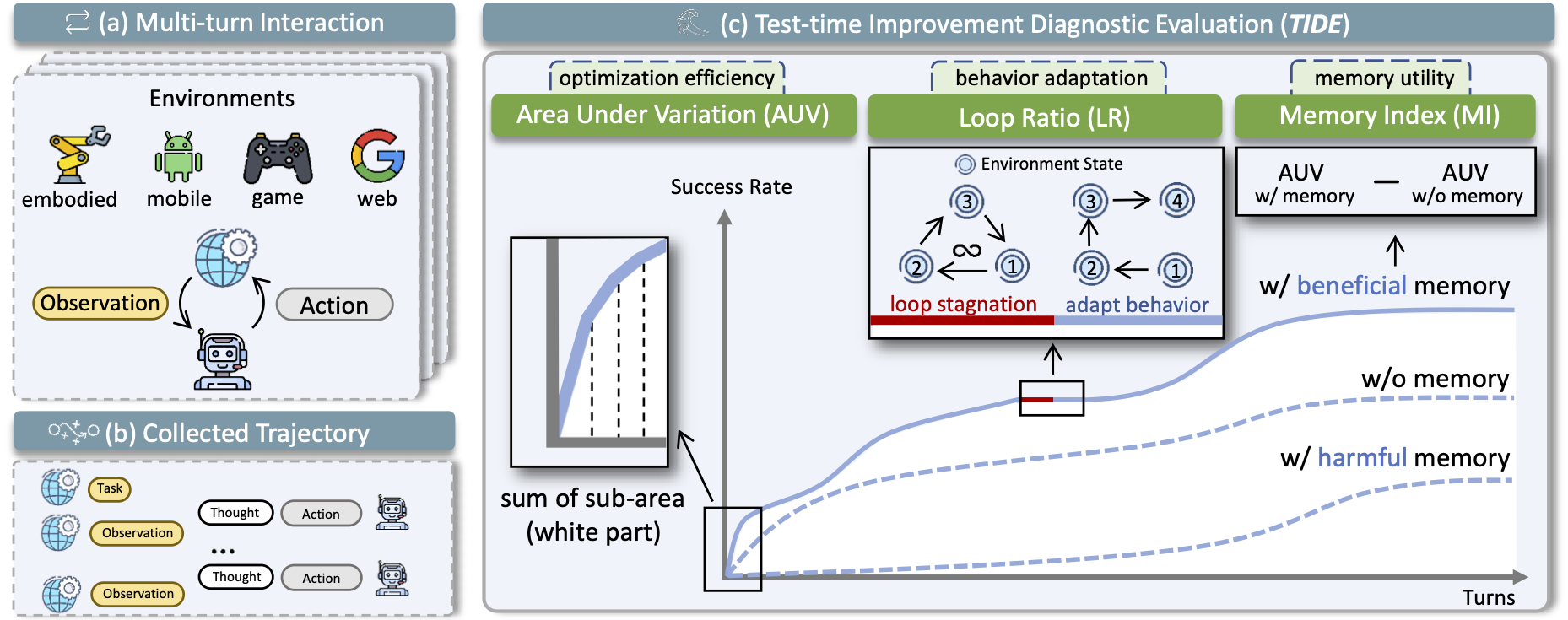

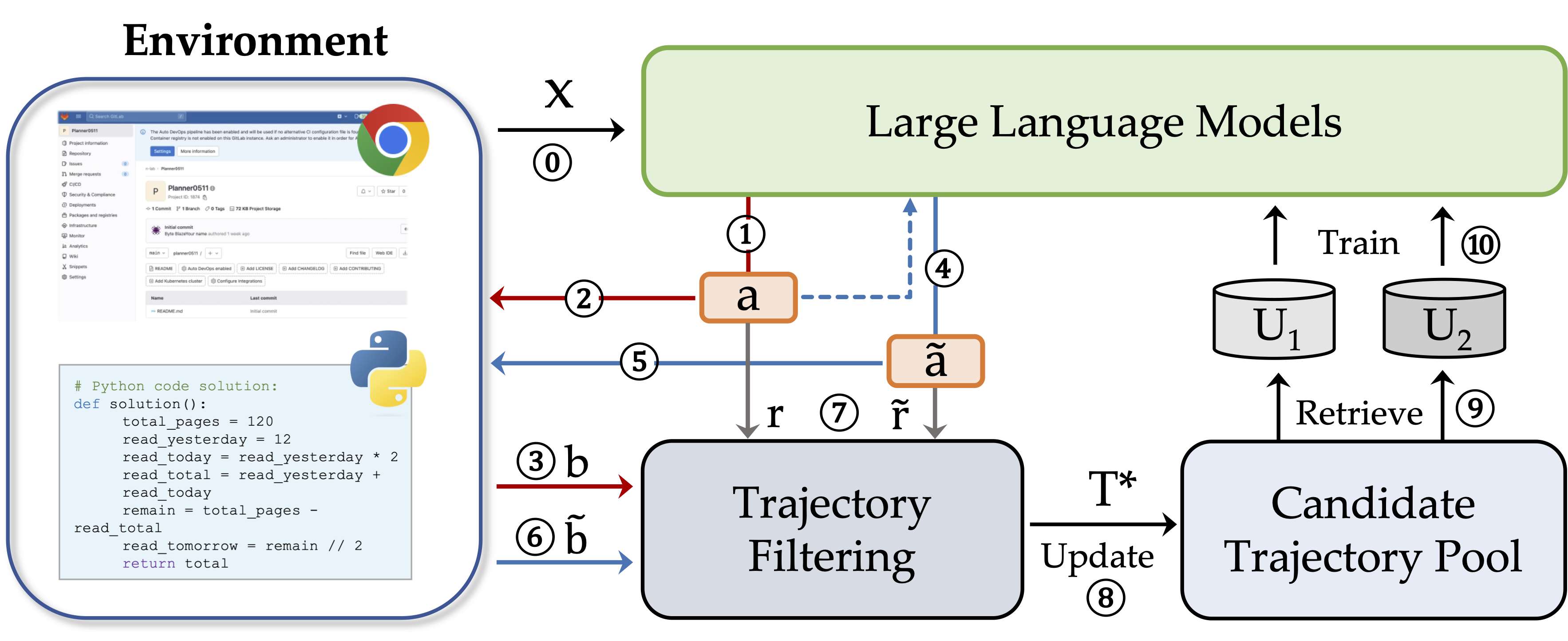

TIDE: Trajectory-based Diagnostic Evaluation of Test-Time Improvement in LLM Agents 🔥🔥

Hang Yan*, Xinyu Che*, Fangzhi Xu*✉, Qiushi Sun, Zichen Ding, Kanzhi Cheng, Jian Zhang, Tao Qin, Jun Liu, Qika Lin✉

(* means equal contributions)

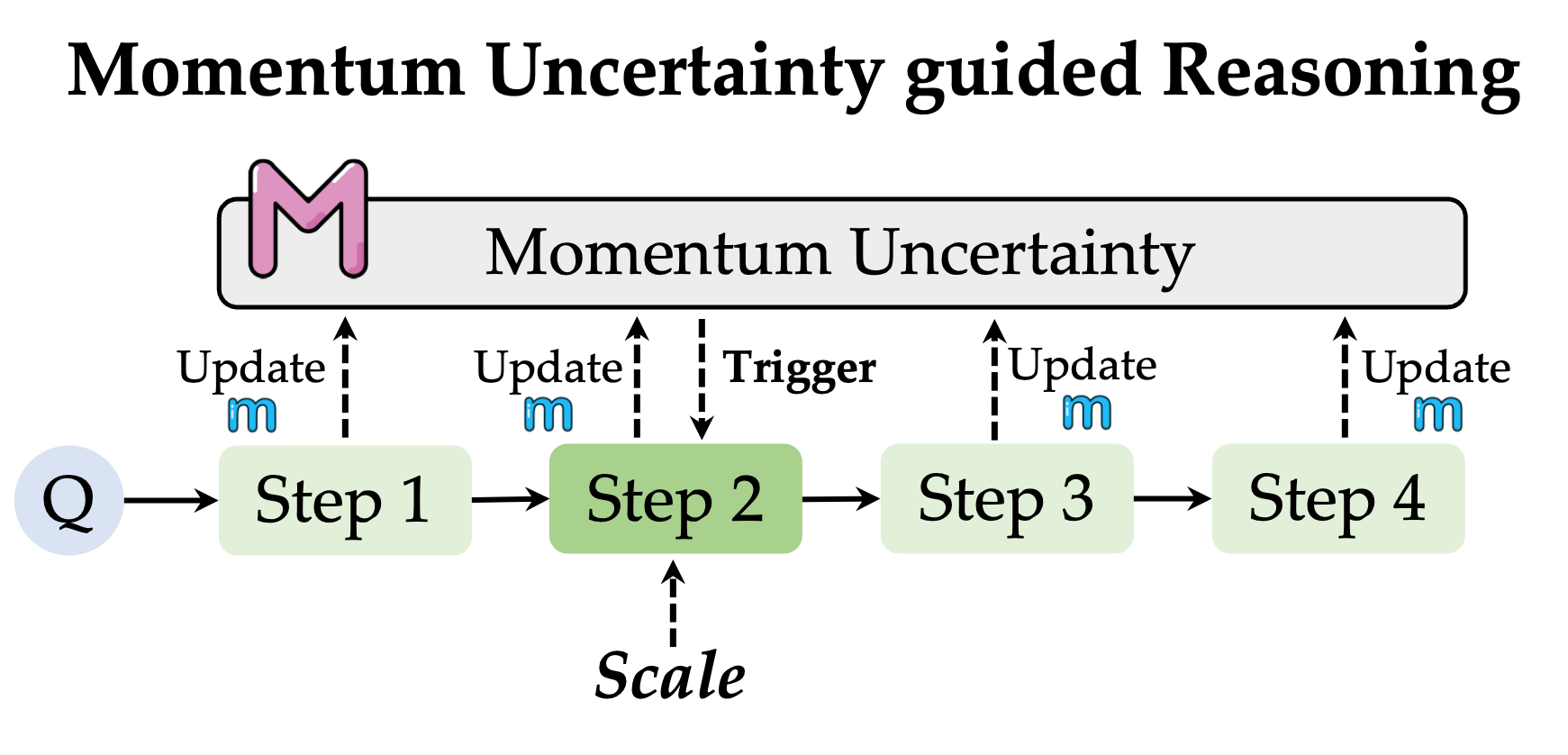

MUR: Momentum Uncertainty guided Reasoning for Large Language Models

Hang Yan*, Fangzhi Xu*✉, Rongman Xu, Yifei Li, Jian Zhang, Haoran Luo, Xiaobao Wu, Luu Anh Tuan, Haiteng Zhao✉, Qika Lin, Jun Liu✉

(* means equal contributions)

Genius: A Generalizable and Purely Unsupervised Self-Training Framework For Advanced Reasoning [CCF-A]

Fangzhi Xu, Hang Yan, Chang Ma, Haiteng Zhao, Qiushi Sun, Kanzhi Cheng, Junxian He, Jun Liu, Zhiyong Wu

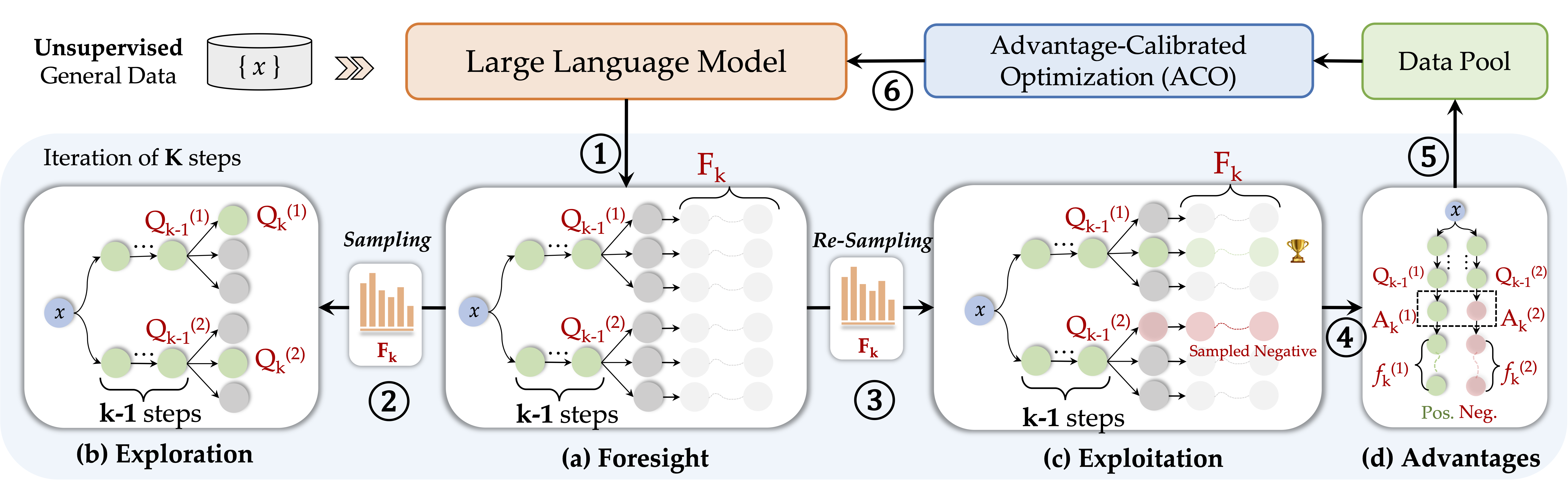

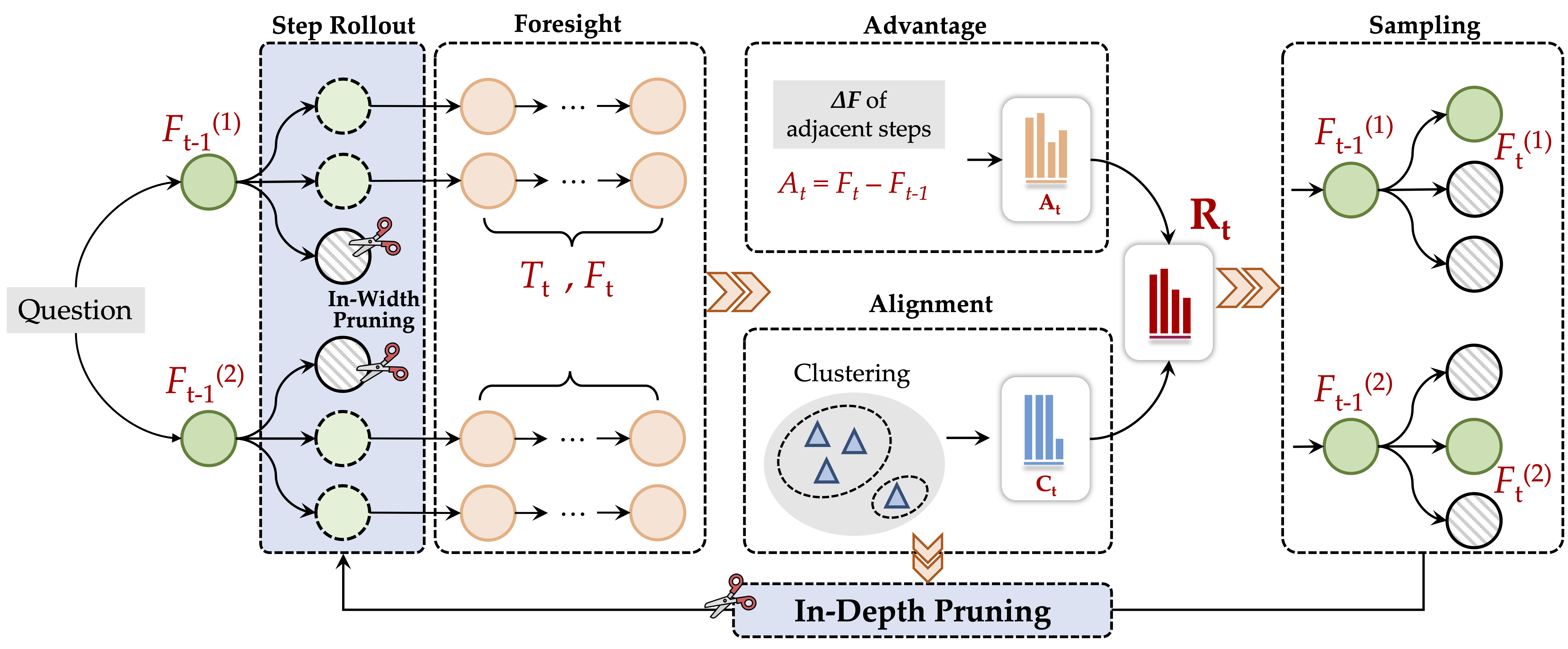

ϕ-Decoding: Adaptive Foresight Sampling for Balanced Inference-Time Exploration and Exploitation [CCF-A]

Fangzhi Xu*, Hang Yan*, Chang Ma, Haiteng Zhao, Jun Liu, Qika Lin, Zhiyong Wu

(* means equal contributions)

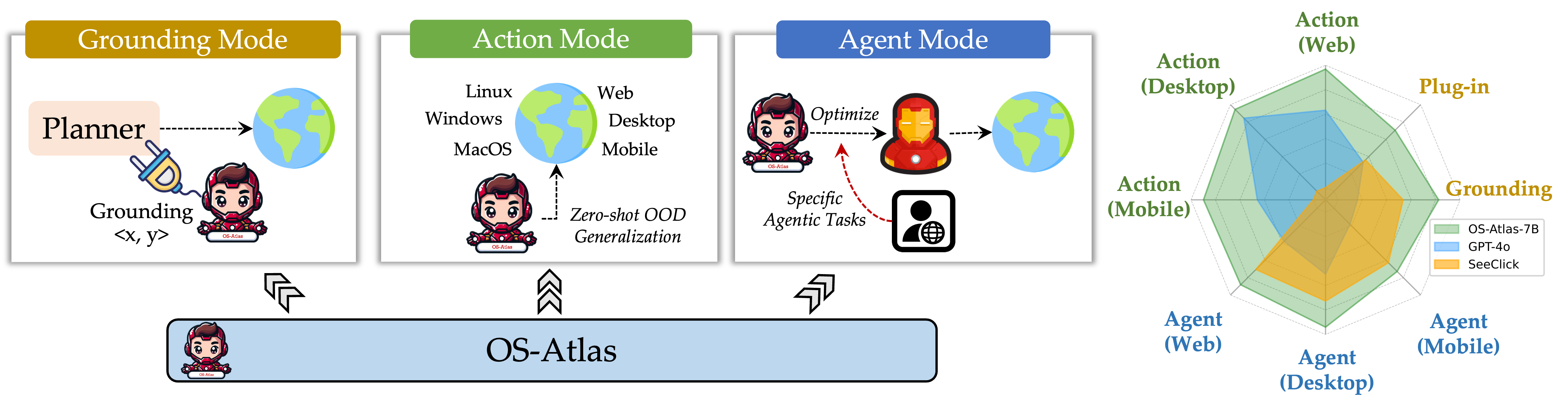

OS-ATLAS: A Foundation Action Model for Generalist GUI Agents

Zhiyong Wu*, Zhenyu Wu*, Fangzhi Xu*, Yian Wang*, Qiushi Sun, Chengyou Jia, Kanzhi Cheng, Zichen Ding, Liheng Chen, Paul Pu Liang, Yu Qiao

(* means equal contributions)

Interactive Evolution: A Neural-symbolic Self-Training Framework for Large Language Models [CCF-A]

Fangzhi Xu, Qiushi Sun, Kanzhi Cheng, Jun Liu, Yu Qiao, Zhiyong Wu.

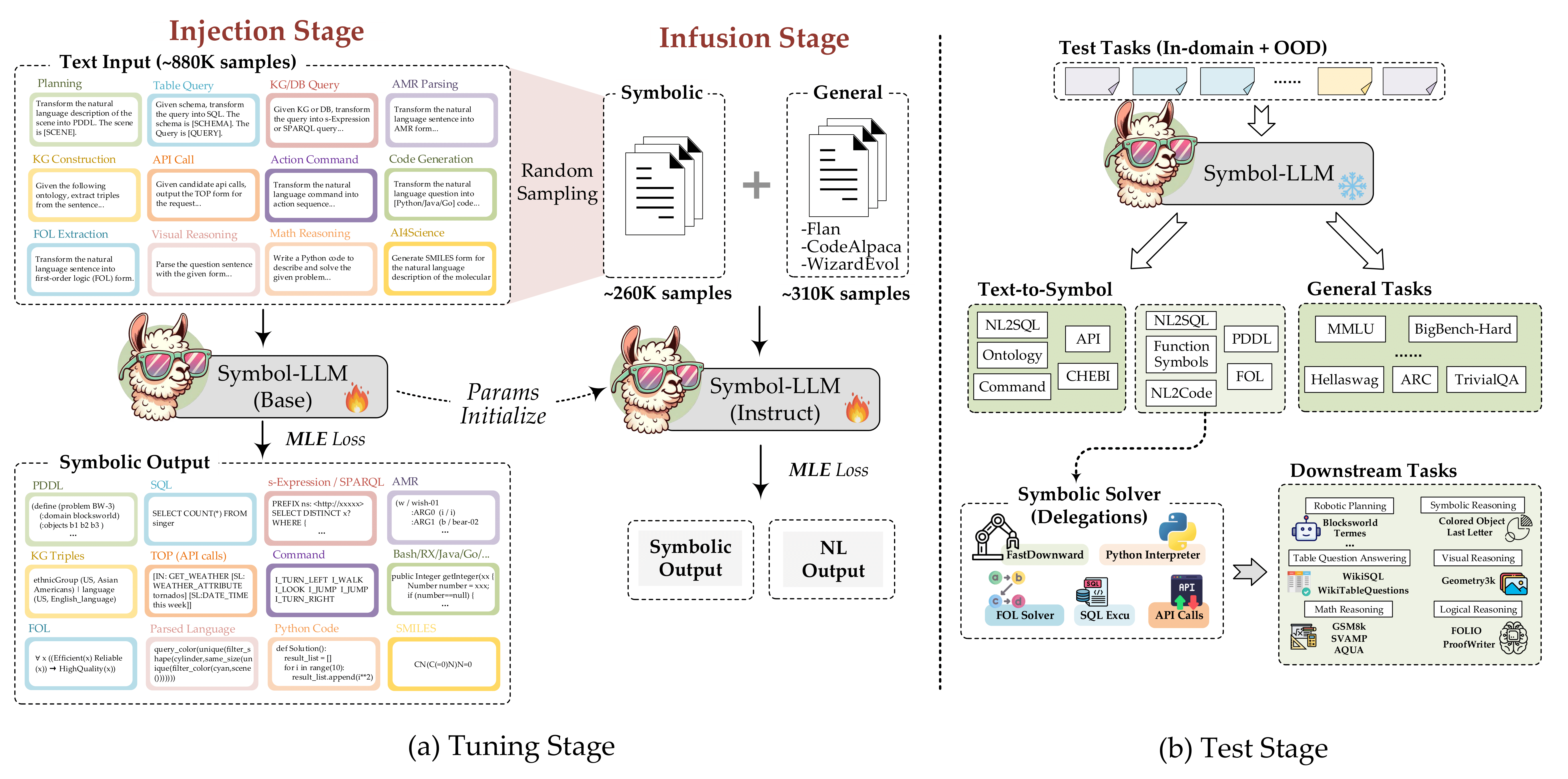

Symbol-LLM: Towards Foundational Symbol-centric Interface For Large Language Models [CCF-A]

Fangzhi Xu, Zhiyong Wu, Qiushi Sun, Siyu Ren, Fei Yuan, Shuai Yuan, Qika Lin, Yu Qiao and Jun Liu.

PathReasoner: Modeling Reasoning Path with Equivalent Extension for Logical Question Answering [CCF-A]

Fangzhi Xu, Qika Lin, Tianzhe Zhao, Jiawei Han, Jun Liu

Are Large Language Models Really Good Logical Reasoners? A Comprehensive Evaluation and Beyond [CCF-A] (🏆 ESI Highly Cited)

Fangzhi Xu*, Qika Lin*, Jiawei Han, Tianzhe Zhao, Jun Liu and Erik Cambria

(* means equal contributions)

Logiformer: A Two-Branch Graph Transformer Network for Interpretable Logical Reasoning [CCF-A]

Fangzhi Xu, Jun Liu, Qika Lin, Yudai Pan and Lingling Zhang

🧑 Other Papers

[ICLR 2026] ScienceBoard: Evaluating Multimodal Autonomous Agents in Realistic Scientific Workflows

Qiushi Sun, Zhoumianze Liu, Chang Ma, Zichen Ding, Fangzhi Xu, Zhangyue Yin, Haiteng Zhao, Zhenyu Wu, Kanzhi Cheng, Zhaoyang Liu, Jianing Wang, Qintong Li, Xiangru Tang, Tianbao Xie, Xiachong Feng, Xiang Li, Ben Kao, Wenhai Wang, Biqing Qi, Lingpeng Kong, Zhiyong Wu

[AAAI 2026] MAPS: Multi-Agent Personality Shaping for Collaborative Reasoning [CCF-A]

Jian Zhang, Zhiyuan Wang, Zhangqi Wang, Xinyu Zhang, Fangzhi Xu, Qika Lin, Rui Mao, Erik Cambria, Jun Liu

[NeurIPS 2025] ChartSketcher: Reasoning with Multimodal Feedback and Reflection for Chart Understanding [CCF-A]

Muye Huang, Lingling Zhang, Han Lai, Fangzhi Xu, Yifei Li, Wenjun Wu, Yaqiang Wu, Jun Liu

[Preprint] BioMaze: Benchmarking and Enhancing Large Language Models for Biological Pathway Reasoning

Haiteng Zhao, Chang Ma, Fangzhi Xu, Lingpeng Kong, Zhi-Hong Deng

[ACL 2025 Findings] CapArena: Benchmarking and Analyzing Detailed Image Captioning in the LLM Era

Kanzhi Cheng, Wenpo Song, Jiaxin Fan, Zheng Ma, Qiushi Sun, Fangzhi Xu, Chenyang Yan, Nuo Chen, Jianbing Zhang, Jiajun Chen

[ACL 2025] OS-Genesis: Automating GUI Agent Trajectory Construction via Reverse Task Synthesis [CCF-A]

Qiushi Sun, Kanzhi Cheng, Zichen Ding, Chuanyang Jin, Yian Wang, Fangzhi Xu, Zhenyu Wu, Chengyou Jia, Liheng Chen, Zhoumianze Liu, Ben Kao, Guohao Li, Junxian He, Yu Qiao, Zhiyong Wu

[ACL 2025] Self-supervised Quantized Representation for Seamlessly Integrating Knowledge Graphs with Large Language Models [CCF-A]

Qika Lin, Tianzhe Zhao, Kai He, Zhen Peng, Fangzhi Xu, Ling Huang, Jingying Ma, Mengling Feng

[ACL 2025 Findings] AgentStore: Scalable Integration of Heterogeneous Agents As Specialized Generalist Computer Assistant

Chengyou Jia, Minnan Luo, Zhuohang Dang, Qiushi Sun, Fangzhi Xu, Junlin Hu, Tianbao Xie, Zhiyong Wu

[NAACL 2025 (Oral)] Vision-Language Models Can Self-Improve Reasoning via Reflection [CCF-B]

Kanzhi Cheng, Yantao Li, Fangzhi Xu, Jianbing Zhang, Hao Zhou, Yang Liu

[Preprint] A Survey of Neural Code Intelligence: Paradigms, Advances and Beyond

Qiushi Sun, Zhirui Chen, Fangzhi Xu, Chang Ma, Kanzhi Cheng, Zhangyue Yin, Jianing Wang, Chengcheng Han, Renyu Zhu, Shuai Yuan, Pengcheng Yin, Qipeng Guo, Xipeng Qiu, Xiaoli Li, Fei Yuan, Lingpeng Kong, Xiang Li, Zhiyong Wu

[ACL 2024] SeeClick: Harnessing GUI Grounding for Advanced Visual GUI Agents [CCF-A]

Kanzhi Cheng, Qiushi Sun, Yougang Chu, Fangzhi Xu, Yantao Li, Jianbing Zhang, Zhiyong Wu

[COLING 2024] A Semantic Mention Graph Augmented Model for Document-Level Event Argument Extraction [CCF-B]

Jian Zhang, Changlin Yang, Haiping Zhu, Qika Lin, Fangzhi Xu and Jun Liu

[ACL 2023] TECHS: Temporal Logical Graph Networks for Explainable Extrapolation Reasoning [CCF-A]

Qika Lin, Jun Liu, Rui Mao, Fangzhi Xu and Erik Cambria

[Information Fusion] Fusing Topology Contexts and Logical Rules in Language Models for Knowledge Graph Completion [SCI-Q1]

Qika Lin, Rui Mao, Jun Liu, Fangzhi Xu, Erik Cambria

[IEEE TNNLS] Mind Reasoning Manners: Enhancing Type Perception for Generalized Zero-shot Logical Reasoning over Text [CCF-B]

Fangzhi Xu, Jun Liu, Qika Lin, Tianzhe Zhao, Jian Zhang, Lingling Zhang

[APWEB-WAIM 2022] Incorporating Prior Type Information for Few-shot Knowledge Graph Completion [CCF-C] (Excellent Student Paper)

Siyu Yao, Tianzhe Zhao, Fangzhi Xu, Jun Liu

[SIGIR 2022] Incorporating Context Graph with Logical Reasoning for Inductive Relation Prediction [CCF-A]

Qika Lin, Jun Liu, Fangzhi Xu, Yudai Pan, Yifan Zhu, Lingling Zhang and Tianzhe Zhao

[Pattern Recognition] MoCA: Incorporating Domain Pretraining and Cross Attention for Textbook Question Answering [CCF-B]

Fangzhi Xu, Qika Lin, Jun Liu, Lingling Zhang, Tianzhe Zhao, Qi Chai, Yudai Pan

[IEEE TKDE] Contrastive Graph Representations for Logical Formulas Embedding [CCF-A]

Qika Lin, Jun Liu, Lingling Zhang, Yudai Pan, Xin Hu, Fangzhi Xu, Hongwei Zeng

🎖 Honors and Awards

Honors

- Oct.2022 Outstanding Student in Xi’an Jiaotong University

- Jun.2021 Outstanding Undergraduate Graduates in Shaanxi Province

- Sep.2020 Top-10 Students of the Year (Top Personal Award in Xi’an Jiaotong University)

- Sep.2019 Outstanding Student in Xi’an Jiaotong University

- Sep.2018 Outstanding Student in Xi’an Jiaotong University

Competition Awards

- Dec.2022 “Huawei Cup” Mathematical Contest in Modeling, Second Prize

- Mar.2022 Asia and Pacific Mathematical Contest in Modeling (APMCM), First Prize + Innovation Award (Top-2)

- Dec.2021 “Huawei Cup” Mathematical Contest in Modeling, Second Prize

- Sep.2020 IKCEST International Big Data Competition, Excellence Award

- Apr.2020 Mathematical Contest in Modeling (COMAP), Finalist

- Dec.2019 China Mathematical Contest in Modeling (CUMCM), Second Prize

- May.2018 National English Competition for College Students (NECCS), Second Prize

🎖 Scholarships

- 2025.09 National Scholarship

- 2025.09 Tencent Scholarship (First-Class)

- 2023.09 Freshman First Prize Scholarship (PhD)

- 2022.09 National Scholarship

- 2022.09 Huawei Scholarship

- 2022.04 ACM SIGIR Student Travel Grant

- 2021.09 Freshman First Prize Scholarship (Master)

- 2020.09 Chinese Modern Scientists Scholarship (Only for Top-10 Students)

- 2019.09 National Scholarship

- 2018.09 National Scholarship

💬 Academic Services

- Program Committee Member: ICML 2026, ACL 2026, ICLR 2026, EMNLP 2025, ACL 2025, ACL 2024, IJCAI 2024, SIGIR 2024, SIGIR 2023, SIGIR-AP 2023

- Reviewer: IEEE TNNLS